10 Best No-Code Web Scrapers for Effortless Data Extraction in 2025

Expert in Web Scraping Technologies

Key Takeaways:

- No-code web scrapers empower users without programming skills to extract data efficiently.

- These tools simplify complex scraping tasks, including handling dynamic content and anti-bot measures.

- The market for no-code web scraping is rapidly growing, with AI-driven solutions leading the innovation.

- Choosing the right tool depends on your specific needs, budget, and the complexity of the target websites.

- Scrapeless offers a powerful, automated no-code solution for reliable and scalable data extraction.

Introduction: The Rise of No-Code Data Extraction

In an increasingly data-driven world, the ability to collect and analyze information from the web is paramount. However, traditional web scraping often requires programming knowledge, presenting a barrier for many businesses, researchers, and individuals. This is where no-code web scrapers step in, democratizing data extraction by allowing users to effortlessly gather information without writing a single line of code. These intuitive tools leverage visual interfaces, pre-built templates, and intelligent automation to navigate websites, identify data points, and extract them into structured formats. The no-code movement is transforming how we interact with web data, making it accessible to a broader audience and accelerating insights. This guide will explore the 10 best no-code web scrapers available in 2025, detailing their features, benefits, and ideal use cases. We will also highlight how a comprehensive platform like Scrapeless can provide an unparalleled solution for effortless and reliable data extraction, especially when dealing with complex or large-scale projects.

What is a No-Code Web Scraper?

A no-code web scraper is a software tool that allows users to extract data from websites without writing any programming code. These tools typically feature intuitive graphical user interfaces (GUIs) where users can visually select the data they want to extract, define scraping rules, and configure data output formats. The tool abstracts away the underlying complexity of web scraping, such as HTML parsing, JavaScript rendering, pagination, and anti-bot measures. This empowers individuals and organizations without technical expertise to perform sophisticated data collection tasks, significantly lowering the barrier to entry for web data acquisition. The market for no-code web scraper tools is growing rapidly, with projections putting it at USD 1.03 billion in 2025 and potentially double that by 2030 [7]. This growth is fueled by the increasing demand for data across various industries and the desire for faster, more accessible data extraction methods.
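To make the "abstracted away" part concrete, here is a minimal sketch of the kind of HTML parsing a no-code tool performs behind its point-and-click selector. It uses only Python's standard library; the sample markup, the class names (`name`, `price`), and the `ClassTextParser` helper are illustrative assumptions, not the internals of any tool on this list.

```python
from html.parser import HTMLParser

# Tags that never have a closing tag; they must not affect nesting depth.
VOID_TAGS = {"br", "img", "hr", "input", "meta", "link"}

class ClassTextParser(HTMLParser):
    """Collects the text of every element whose class list contains target_class."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self._depth = 0   # greater than 0 while inside a matching element
        self.items = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        classes = (dict(attrs).get("class") or "").split()
        if self._depth:
            self._depth += 1          # nested element inside a match
        elif self.target_class in classes:
            self._depth = 1           # a new match starts here
            self.items.append("")

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self._depth:
            self._depth -= 1

    def handle_data(self, data):
        if self._depth:
            self.items[-1] += data

def extract(html, target_class):
    parser = ClassTextParser(target_class)
    parser.feed(html)
    parser.close()
    return [item.strip() for item in parser.items]

# Hypothetical product listing, standing in for a real page.
SAMPLE_HTML = """
<ul>
  <li><span class="name">Widget</span> <span class="price">$9.99</span></li>
  <li><span class="name">Gadget</span> <span class="price">$24.50</span></li>
</ul>
"""

rows = list(zip(extract(SAMPLE_HTML, "name"), extract(SAMPLE_HTML, "price")))
print(rows)
```

A visual selector effectively generates rules like `extract(page, "price")` for you; real pages add JavaScript rendering and pagination on top, which this sketch does not attempt.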

Why Choose No-Code Web Scrapers?

No-code web scrapers offer numerous advantages that make them an attractive option for a wide range of users:

- Accessibility: They democratize web data, making it available to marketers, business analysts, researchers, and small business owners who may lack coding skills.

- Speed and Efficiency: Data extraction can be set up and executed much faster compared to writing custom code, accelerating time-to-insight. Some reports suggest an 89% reduction in time-to-data compared to custom development [8].

- Cost-Effectiveness: By eliminating the need for developers, no-code tools can significantly reduce operational costs associated with data collection. They can be up to 76% cheaper than traditional IT solutions [8].

- Ease of Use: Visual interfaces and drag-and-drop functionalities simplify the process of defining scraping rules.

- Maintenance: Many platforms handle infrastructure, updates, and anti-bot measures, reducing the maintenance burden on users.

- Focus on Data: Users can concentrate on what data they need and how to use it, rather than on the technicalities of extraction.

The 10 Best No-Code Web Scrapers for Effortless Data Extraction in 2025

Here's a curated list of the top no-code web scrapers that stand out in 2025, offering robust features for various data extraction needs. Each tool brings unique strengths to the table, catering to different levels of complexity and user requirements. These tools are revolutionizing how businesses and individuals approach data collection, making it more efficient and accessible than ever before.

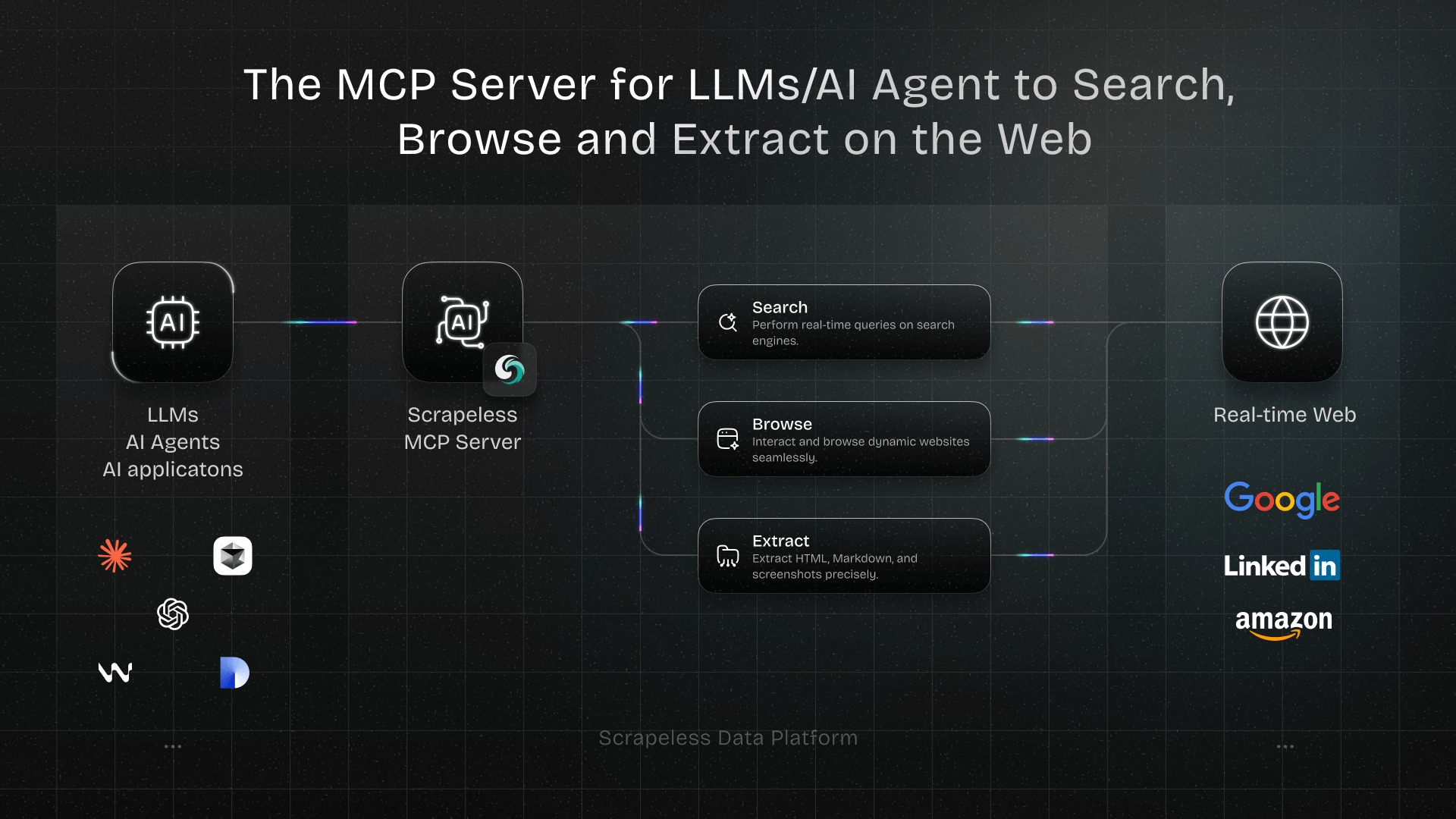

1. Scrapeless: The AI-Powered Data Extraction Platform

Scrapeless is an advanced, AI-driven web scraping platform designed to provide effortless data extraction, especially from complex and dynamic websites. It stands out by abstracting away the technical challenges of web scraping, offering a truly no-code experience for users. With Scrapeless, you don't need to worry about JavaScript rendering, CAPTCHA solving, proxy management, or anti-bot bypasses; the platform handles it all automatically. This makes it an ideal solution for businesses and individuals who need reliable, scalable, and high-quality data without the overhead of managing a scraping infrastructure. Scrapeless is not just a tool; it's a comprehensive service that ensures you get the data you need, when you need it, with minimal effort.

Key Features:

- AI-Powered Data Extraction: Intelligently identifies and extracts data based on your requirements, even from unstructured sources.

- Automatic Anti-Bot Bypass: Seamlessly handles CAPTCHAs, IP blocks, and other anti-scraping mechanisms.

- JavaScript Rendering: Fully renders dynamic websites, ensuring all content is accessible.

- Proxy Management: Automatically rotates proxies to maintain anonymity and avoid detection.

- Scalability: Designed for large-scale data extraction, capable of handling millions of requests.

- User-Friendly Interface: Provides an intuitive platform for defining scraping tasks and managing data.

Pros:

- Extremely easy to use, requiring no coding skills.

- Highly reliable for complex and dynamic websites.

- Automates most common scraping challenges.

- Excellent for large-scale data projects.

- Focuses on delivering clean, structured data.

Cons:

- Advanced configurations may involve a learning curve for new users.

- Pricing might be higher than basic free tools for very small projects.

Ideal for: Businesses, data analysts, and researchers who need reliable, scalable, and hassle-free data extraction from any website, especially those with strong anti-scraping measures or dynamic content. It's the go-to for effortless data extraction.

2. Octoparse: Visual Web Scraping for Everyone

Octoparse is a popular no-code web scraper known for its visual point-and-click interface, making it accessible to users of all skill levels. It allows you to create scraping workflows by simply clicking on the data you want to extract from a webpage. Octoparse handles various website structures, including dynamic content, pagination, and login-protected sites. It offers both cloud-based and local scraping options, providing flexibility for different project needs. Octoparse is widely recognized for its user-friendly design and robust feature set, making it a strong contender for effortless data extraction [4].

Key Features:

- Point-and-Click Interface: Visually select data fields and define extraction rules.

- Cloud Platform: Run scrapers in the cloud, eliminating the need for local resources.

- Scheduled Scraping: Automate data collection at regular intervals.

- IP Rotation: Helps bypass IP blocking and maintain anonymity.

- Template Library: Offers pre-built templates for popular websites.

- API Access: Integrate extracted data into other applications.

Pros:

- Very easy to learn and use for beginners.

- Handles complex websites with dynamic content and pagination.

- Cloud service allows for continuous scraping without local machine involvement.

- Good customer support and community resources.

Cons:

- Can be resource-intensive for very large projects on local machines.

- Free plan has limitations on features and data volume.

- May require some trial and error for highly customized scraping tasks.

Ideal for: Small to medium businesses, marketers, and data analysts who need to extract structured data from various websites without coding. It's particularly good for those who prefer a visual approach to building scrapers.

3. ParseHub: Powerful and Flexible Visual Scraper

ParseHub is another highly-rated no-code web scraper that provides a powerful visual interface for data extraction. It excels at handling complex websites, including those with JavaScript, AJAX, redirects, and login forms. ParseHub allows users to define intricate scraping logic, such as handling infinite scrolling, clicking through pagination, and extracting data from nested elements. Its desktop application offers a high degree of control and flexibility, making it suitable for more advanced scraping projects where a visual approach is still preferred. ParseHub is a robust tool for effortless data extraction from challenging web sources.

Key Features:

- Visual Selection: Point-and-click to select and extract data.

- Complex Interactions: Supports infinite scrolling, pagination, forms, and dropdowns.

- Desktop Application: Provides a dedicated environment for building and testing scrapers.

- Cloud-Based Execution: Run projects on ParseHub's servers for scalability.

- API & Webhooks: Integrate extracted data with other systems.

- Regex Support: For advanced pattern matching and data cleaning.

Pros:

- Handles highly complex and dynamic websites effectively.

- Offers a high level of customization for scraping logic.

- Reliable cloud infrastructure for running projects.

- Good for recurring scraping tasks.

Cons:

- Steeper learning curve compared to simpler tools.

- Free plan is quite limited in terms of projects and data.

- Requires desktop installation.

Ideal for: Data professionals, researchers, and businesses that need to extract data from complex, dynamic websites and require a high degree of control over the scraping process, all within a no-code visual environment.

4. Apify: The Web Scraping and Automation Platform

Apify is a versatile platform that offers a wide range of tools for web scraping and automation, including many no-code and low-code solutions. It provides a vast library of pre-built Actors (ready-to-use scraping solutions) that can be run without any coding. Apify is particularly strong for large-scale data extraction, offering robust features for proxy management, anti-bot bypass, and cloud execution. While it also supports custom code for more advanced users, its extensive collection of no-code Actors makes it a powerful choice for effortless data extraction. Apify is a comprehensive platform for all your web scraping needs.

Key Features:

- Actors: A marketplace of pre-built, customizable scraping and automation solutions.

- Cloud Platform: Run and scale scrapers in the cloud with robust infrastructure.

- Proxy Management: Built-in proxy rotation and management.

- Anti-Bot Bypass: Advanced capabilities to handle anti-scraping technologies.

- Scheduler: Automate tasks to run at specified intervals.

- API & Webhooks: Seamless integration with other applications.

Pros:

- Highly scalable for large-volume data extraction.

- Extensive library of ready-to-use solutions (Actors).

- Excellent for complex scraping tasks and dynamic websites.

- Strong community and support.

Cons:

- Can be overwhelming for absolute beginners due to its vast feature set.

- Pricing can become significant for very high usage.

Ideal for: Developers, data scientists, and businesses looking for a scalable and flexible platform that offers both no-code solutions for quick tasks and the option for custom code for highly specific requirements. It's perfect for effortless data extraction at scale.

5. Bright Data Web Scraper IDE: Enterprise-Grade Data Collection

Bright Data is a leading provider of web data platform services, and their Web Scraper IDE offers a powerful no-code/low-code solution for data extraction. It's designed for enterprise-level data collection, providing robust infrastructure, advanced proxy networks, and sophisticated anti-blocking capabilities. While it offers a visual interface for defining scraping logic, it also provides the flexibility for more technical users to customize their scrapers with code. Bright Data's focus is on delivering high-quality, reliable data at scale, making it a top choice for businesses with demanding data needs. It ensures effortless data extraction even from the most challenging websites.

Key Features:

- Visual Editor: Point-and-click interface for defining scraping rules.

- Advanced Proxy Network: Access to a vast pool of residential, datacenter, and mobile proxies.

- Anti-Blocking Mechanisms: Sophisticated technology to bypass CAPTCHAs and other anti-bot measures.

- Cloud Infrastructure: Scalable cloud execution for large projects.

- Data Delivery Options: Various formats and delivery methods (API, Webhooks, S3, GCS).

- Dedicated Support: 24/7 support for enterprise clients.

Pros:

- Extremely reliable for large-scale and complex scraping tasks.

- Industry-leading proxy network and anti-blocking solutions.

- Flexible for both no-code and low-code users.

- High-quality data output.

Cons:

- Can be more expensive than other tools, especially for smaller projects.

- The platform's extensive features might have a learning curve.

Ideal for: Enterprises and large organizations that require high-volume, high-quality, and reliable data extraction from challenging websites, with a focus on effortless data extraction and robust infrastructure.

6. Web Scraper (Chrome Extension): Browser-Based Simplicity

Web Scraper is a popular Chrome extension that allows users to build sitemaps (scraping configurations) directly within their browser. It's a truly no-code solution that integrates seamlessly into your browsing experience. You can visually select elements, define navigation paths, and extract data without leaving the webpage. While it's limited to running within the Chrome browser, its ease of use and accessibility make it an excellent choice for beginners and those with smaller, ad-hoc scraping needs. It's a straightforward tool for effortless data extraction directly from your browser.

Key Features:

- Browser Extension: Integrates directly into Chrome.

- Point-and-Click Selector: Visually define data points and navigation.

- Sitemap Creation: Build and manage scraping configurations.

- Data Export: Export data in CSV, XLSX, or JSON formats.

- Pagination & Dynamic Content: Basic support for these features.

Pros:

- Extremely easy to get started and use.

- No software installation required beyond the browser extension.

- Free for basic usage.

- Good for quick, small-scale scraping tasks.

Cons:

- Limited to Chrome browser.

- Not suitable for very large-scale or complex projects.

- Relies on your local machine's resources.

- Less robust for advanced anti-bot measures.

Ideal for: Individuals, students, and small businesses who need to perform occasional, simple web scraping tasks directly from their browser without any coding. It's a great entry point for effortless data extraction.

7. Browse AI: AI-Powered Visual Scraper

Browse AI is a modern no-code web scraper that leverages artificial intelligence to simplify data extraction. It allows users to train a robot (or "extractor") by demonstrating the desired data points and interactions. The AI then learns to replicate these actions across similar pages, making it highly effective for dynamic and visually complex websites. Browse AI focuses on ease of use and automation, allowing users to set up monitors for website changes and receive data updates automatically. It's a powerful tool for effortless data extraction with minimal manual intervention.

Key Features:

- AI-Powered Training: Train extractors by simply showing the tool what data to collect.

- Monitor Websites: Automatically track changes on websites and get notified or receive updated data.

- Cloud-Based: Runs in the cloud, no local installation required.

- API & Webhooks: Integrate extracted data into your workflows.

- Screenshot Capture: Capture screenshots of the scraped pages.

Pros:

- Extremely intuitive for non-technical users.

- Excellent for dynamic content and complex layouts due to AI capabilities.

- Automated monitoring saves time and effort.

- Good for recurring data collection tasks.

Cons:

- May require some initial training time for complex scenarios.

- Pricing can be a factor for high-volume usage.

Ideal for: Marketers, sales teams, and business analysts who need to monitor competitor websites, track product prices, or collect leads without any coding. It's perfect for effortless data extraction from visually rich sites.
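The website-monitoring idea described above can be sketched in a few lines: store a fingerprint of the page text and compare it on the next visit. This is a generic change-detection illustration, not Browse AI's actual mechanism; the function names and sample strings are assumptions made for the example.

```python
import hashlib

def fingerprint(text):
    """Return a stable hash of the page text, ignoring whitespace differences."""
    normalized = " ".join(text.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def has_changed(previous_fingerprint, current_text):
    """True when the current page text no longer matches the stored fingerprint."""
    return fingerprint(current_text) != previous_fingerprint

# Hypothetical snapshots of the same product page on different days.
yesterday = "Price:  $19.99\nIn stock"
today_same = "Price: $19.99 In stock"    # only the whitespace differs
today_new = "Price: $17.99 In stock"     # the price dropped

baseline = fingerprint(yesterday)
print(has_changed(baseline, today_same))
print(has_changed(baseline, today_new))
```

Hashing the normalized text rather than the raw HTML avoids false alarms from layout-only changes, at the cost of missing changes that live purely in markup or images.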

8. ScrapeHero: Managed Services and Self-Service Platform

ScrapeHero offers a hybrid approach to no-code web scraping, providing both a self-service platform and managed scraping services. Their self-service platform allows users to build scrapers using a visual interface, similar to other tools on this list. However, for more complex or large-scale projects, their managed services team can build and maintain custom scrapers for you. This flexibility makes ScrapeHero a robust option for businesses that need reliable data extraction but prefer to offload the technical complexities. It's a versatile solution for effortless data extraction, catering to different levels of technical involvement.

Key Features:

- Visual Scraper Builder: Point-and-click interface for creating scrapers.

- Managed Services: Option for ScrapeHero to build and maintain custom scrapers.

- Cloud-Based: Run scrapers on their infrastructure.

- API Access: Integrate data into your applications.

- Data Delivery: Various formats and delivery options.

Pros:

- Flexible options for self-service or fully managed scraping.

- Handles complex websites and large data volumes.

- Reliable and scalable infrastructure.

- Good for businesses that need a hands-off approach.

Cons:

- Self-service platform might have a learning curve.

- Managed services can be more expensive.

Ideal for: Businesses of all sizes that need reliable web data but want the flexibility to choose between building their own scrapers or having a team manage it for them. It's a strong contender for effortless data extraction with professional support.

9. WebAutomation: Ready-Made Scrapers and Custom Solutions

WebAutomation is a platform that specializes in providing ready-made scrapers for popular websites, allowing users to extract data instantly without any setup. They offer a vast library of pre-built extractors for e-commerce, social media, news, and more. Additionally, users can request custom scrapers or build their own using a visual editor. This combination of instant solutions and customization options makes WebAutomation a convenient choice for users who need quick access to data from common sources or have specific, recurring scraping needs. It aims to provide effortless data extraction through its diverse offerings.

Key Features:

- Ready-Made Scrapers: Instant access to data from popular websites.

- Custom Scraper Builder: Visual tool to create your own scrapers.

- Cloud-Based: All scraping runs on their cloud infrastructure.

- Scheduled Runs: Automate data collection.

- Data Export: Various export formats (CSV, JSON, Excel).

Pros:

- Extremely fast data acquisition for popular sites using ready-made scrapers.

- No technical knowledge required for pre-built solutions.

- Good for recurring data needs from specific sources.

- Affordable pricing for many use cases.

Cons:

- Ready-made scrapers might not cover all niche websites.

- Custom scraper building still requires some effort.

Ideal for: Small businesses, marketers, and individuals who frequently need data from well-known websites or require a quick, cost-effective solution for specific data extraction tasks. It's a great choice for effortless data extraction from common sources.

10. DataGrab: Simple and Effective Data Extraction

DataGrab is a straightforward no-code web scraper designed for simplicity and effectiveness. It focuses on providing an easy-to-use interface for extracting structured data from websites without unnecessary complexities. Users can quickly set up scraping tasks, define the data points they need, and export the results in common formats. While it may not offer the same level of advanced features as some enterprise-grade solutions, DataGrab excels at providing a reliable and accessible tool for basic to moderately complex scraping needs. It's a solid option for effortless data extraction for users prioritizing simplicity.

Key Features:

- Intuitive Interface: Easy to navigate and set up scraping tasks.

- Visual Data Selection: Point-and-click to identify data elements.

- Scheduled Scraping: Automate data collection.

- Data Export: Supports CSV, JSON, and Excel.

- Cloud Execution: Runs scrapers in the cloud.

Pros:

- Very easy to learn and use for beginners.

- Good for extracting structured data from most websites.

- Focuses on core scraping functionality without overwhelming features.

- Cost-effective for many users.

Cons:

- May struggle with highly dynamic or complex websites.

- Fewer advanced features compared to other tools.

Ideal for: Individuals and small teams who need a simple, reliable, and cost-effective no-code solution for extracting structured data from websites without extensive technical knowledge. It's a good choice for effortless data extraction for everyday needs.

Comparison Summary: Choosing Your Ideal No-Code Web Scraper

Selecting the best no-code web scraper depends heavily on your specific needs, the complexity of the websites you intend to scrape, your budget, and your desired level of automation. This comparison table provides a quick overview of the top 10 tools discussed, highlighting their key features and ideal use cases to help you make an informed decision. Understanding these distinctions is crucial for achieving effortless data extraction that aligns with your project goals.

| Tool | Ease of Use | Dynamic Content Handling | Anti-Bot Bypass | Scalability | Pricing Model | Ideal Use Case |
|---|---|---|---|---|---|---|
| Scrapeless | Very High | Excellent | Automated | Very High | Subscription | Complex, large-scale, reliable data extraction |
| Octoparse | High | Good | Basic | High | Freemium | Visual scraping, scheduled tasks |
| ParseHub | Medium | Excellent | Basic | High | Freemium | Complex websites, detailed control |
| Apify | Medium | Excellent | Advanced | Very High | Freemium | Large-scale, flexible (no-code/low-code) |
| Bright Data Web Scraper IDE | Medium | Excellent | Advanced | Very High | Subscription | Enterprise-level, high-volume, reliable data |
| Web Scraper (Chrome Ext.) | Very High | Basic | Manual | Low | Free/Premium | Quick, small-scale, browser-based tasks |
| Browse AI | High | Excellent | Automated | Medium | Freemium | AI-powered, website monitoring, visual data |
| ScrapeHero | Medium | Good | Advanced | High | Subscription | Hybrid (self-service/managed), reliable data |
| WebAutomation | High | Good | Basic | Medium | Freemium | Ready-made scrapers, quick data from popular sites |
| DataGrab | High | Medium | Basic | Medium | Freemium | Simple, effective, cost-effective data extraction |
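If you prefer to narrow the table programmatically, the sketch below encodes a few of its columns and filters on them. The ratings are copied from the table; the ordering of the levels (Low < Medium < High < Very High) and the `shortlist` helper are assumptions introduced for this example.

```python
# (name, ease_of_use, scalability, pricing_model), copied from the comparison table.
TOOLS = [
    ("Scrapeless", "Very High", "Very High", "Subscription"),
    ("Octoparse", "High", "High", "Freemium"),
    ("ParseHub", "Medium", "High", "Freemium"),
    ("Apify", "Medium", "Very High", "Freemium"),
    ("Bright Data Web Scraper IDE", "Medium", "Very High", "Subscription"),
    ("Web Scraper (Chrome Ext.)", "Very High", "Low", "Free/Premium"),
    ("Browse AI", "High", "Medium", "Freemium"),
    ("ScrapeHero", "Medium", "High", "Subscription"),
    ("WebAutomation", "High", "Medium", "Freemium"),
    ("DataGrab", "High", "Medium", "Freemium"),
]

# Assumed ordering of the qualitative ratings.
LEVELS = {"Low": 0, "Medium": 1, "High": 2, "Very High": 3}

def shortlist(min_ease="Low", min_scalability="Low", pricing=None):
    """Return tool names that meet the minimum ratings and, optionally, a pricing model."""
    names = []
    for name, ease, scalability, price in TOOLS:
        if LEVELS[ease] < LEVELS[min_ease]:
            continue
        if LEVELS[scalability] < LEVELS[min_scalability]:
            continue
        if pricing is not None and price != pricing:
            continue
        names.append(name)
    return names

easy_and_scalable = shortlist(min_ease="High", min_scalability="High", pricing="Freemium")
most_scalable = shortlist(min_scalability="Very High")
print(easy_and_scalable)
print(most_scalable)
```

The filter is only as good as the qualitative ratings it encodes, so treat the result as a starting shortlist rather than a recommendation.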

Case Studies and Application Scenarios: No-Code Scraping in Action

No-code web scrapers are transforming various industries by enabling rapid data acquisition for diverse applications. These tools are not just for tech-savvy individuals; they are empowering business users to gain insights and automate workflows that were previously out of reach. Here are a few compelling case studies and application scenarios demonstrating the power of effortless data extraction:

- E-commerce Product Research: An online retailer wants to analyze competitor pricing, product features, and customer reviews across multiple e-commerce platforms. Using a no-code tool like Octoparse or ParseHub, a marketing analyst can visually select product names, prices, descriptions, and review scores from competitor websites. They can set up scheduled runs to collect this data daily or weekly, allowing them to identify market trends, optimize their own pricing strategies, and discover new product opportunities without writing any code. This leads to more informed business decisions and a competitive edge [4].

- Lead Generation for Sales Teams: A sales team needs to build a targeted list of potential clients from online directories or professional networking sites. With a tool like Browse AI, a sales representative can train an extractor to visit relevant company profiles, extract contact information (names, emails, company sizes), and even monitor for new additions to these directories. The AI-powered approach handles variations in website layouts, ensuring consistent data extraction. This automates a traditionally time-consuming task, allowing the sales team to focus on engagement rather than manual data collection, significantly boosting their productivity and lead quality.

- Content Aggregation for News & Media: A media company wants to aggregate news articles from various sources on a specific topic for content analysis or to power a news feed. Using a no-code solution like Apify, a content manager can deploy a pre-built Actor or quickly configure a new one to scrape headlines, article bodies, and publication dates from multiple news websites. Apify's cloud infrastructure ensures that the scraping runs reliably and at scale, providing a constant stream of fresh content. This enables the media company to stay updated on industry trends, perform sentiment analysis, or enrich their own content offerings efficiently and without developer intervention.
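For the e-commerce scenario above, the analysis step after scraping is often simple. The sketch below assumes the tool exported a CSV with `product`, `competitor`, and `price` columns; those column names, the sample rows, and the `OUR_PRICES` figures are all hypothetical.

```python
import csv
import io

# Hypothetical CSV export from a scheduled scraping run.
EXPORT = """product,competitor,price
Widget,ShopA,9.99
Widget,ShopB,8.49
Gadget,ShopA,24.50
Gadget,ShopB,26.00
"""

# Hypothetical prices from the retailer's own catalogue.
OUR_PRICES = {"Widget": 9.49, "Gadget": 23.99}

def cheapest_by_product(csv_text):
    """Map each product to its (competitor, price) pair with the lowest price."""
    cheapest = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        product, price = row["product"], float(row["price"])
        if product not in cheapest or price < cheapest[product][1]:
            cheapest[product] = (row["competitor"], price)
    return cheapest

def undercut_report(csv_text, our_prices):
    """List (product, competitor, price) where a competitor is cheaper than we are."""
    report = []
    for product, (competitor, price) in cheapest_by_product(csv_text).items():
        ours = our_prices.get(product)
        if ours is not None and price < ours:
            report.append((product, competitor, price))
    return report

report = undercut_report(EXPORT, OUR_PRICES)
print(report)
```

In practice the same comparison can be done in a spreadsheet; the point is that the scraper's structured export is what makes the analysis this short.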

These case studies underscore the versatility and impact of no-code web scrapers. They demonstrate how these tools are not merely simplifying data extraction but are actively enabling new business models, accelerating research, and democratizing access to valuable web intelligence across various sectors. The ability to perform effortless data extraction is becoming a critical skill in today's digital economy.

Conclusion: Empowering Data Extraction for Everyone

The landscape of web data acquisition has been irrevocably changed by the advent of no-code web scrapers. These innovative tools have shattered the technical barriers that once confined web scraping to the realm of programmers, opening up a world of data possibilities for businesses, researchers, and individuals alike. From visual point-and-click interfaces to advanced AI-driven automation, the 10 best no-code web scrapers highlighted in this guide offer diverse solutions for effortless data extraction from even the most complex and dynamic websites.

Choosing the right no-code tool is a strategic decision that can significantly impact your data collection efficiency and success. Whether you prioritize ease of use, advanced anti-bot capabilities, scalability, or cost-effectiveness, there is a no-code solution tailored to your needs. These tools not only save time and resources but also empower non-technical users to take control of their data needs, fostering a more data-driven culture across organizations. The market trends for 2025 clearly indicate a continued surge in the adoption of these platforms, driven by their accessibility and powerful capabilities.

For those seeking the ultimate in reliability, scalability, and automated handling of all scraping complexities, Scrapeless stands out as a premier choice. It embodies the future of no-code data extraction by intelligently managing JavaScript rendering, proxy rotation, and anti-bot measures, allowing you to focus solely on leveraging the extracted data. Embrace the power of no-code web scrapers to transform your data acquisition process, unlock invaluable insights, and stay ahead in the competitive digital landscape.


Frequently Asked Questions (FAQ)

Q1: What is a no-code web scraper?

A: A no-code web scraper is a software tool that allows users to extract data from websites without writing any programming code. It typically uses a visual interface (like point-and-click) to define what data to extract and how to navigate a website, abstracting away the technical complexities of web scraping.

Q2: How do no-code web scrapers handle dynamic content or anti-bot measures?

A: Many advanced no-code web scrapers, especially those that are cloud-based or AI-powered (like Scrapeless), incorporate sophisticated mechanisms to handle dynamic content (JavaScript rendering) and anti-bot measures (IP rotation, CAPTCHA solving, user-agent management) automatically. They simulate a real browser environment to ensure all content is loaded and accessible.
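As a rough illustration of what "IP rotation" and "user-agent management" mean, the sketch below cycles through a pool of proxies and user-agent strings so that consecutive requests use different settings. It makes no network calls; the proxy addresses (from a documentation-only IP range), the user-agent strings, and the `rotating_settings` helper are placeholders, and no vendor's actual implementation is implied.

```python
from itertools import cycle

# Placeholder pools; real platforms manage far larger ones.
PROXIES = ["http://203.0.113.10:8080", "http://203.0.113.11:8080"]
USER_AGENTS = ["ExampleBrowser/1.0", "ExampleBrowser/2.0", "ExampleBrowser/3.0"]

def rotating_settings(proxies, user_agents):
    """Yield one settings dict per request, cycling through both pools round-robin."""
    for proxy, agent in zip(cycle(proxies), cycle(user_agents)):
        yield {"proxy": proxy, "headers": {"User-Agent": agent}}

settings = rotating_settings(PROXIES, USER_AGENTS)
first_four = [next(settings) for _ in range(4)]
for s in first_four:
    print(s["proxy"], s["headers"]["User-Agent"])
```

Because the two pools have different lengths, the proxy and user-agent pairings drift over time instead of repeating in lockstep.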

Q3: Are no-code web scrapers suitable for large-scale data extraction?

A: Yes, many no-code web scrapers, particularly enterprise-grade platforms like Scrapeless, Apify, or Bright Data, are designed for scalability. They can handle large volumes of data extraction, often running tasks in the cloud to ensure efficiency and reliability without taxing local resources.

Q4: What are the main limitations of no-code web scrapers?

A: While powerful, no-code web scrapers can have limitations. They might offer less customization than coded solutions, may struggle with extremely unique or complex website structures that deviate significantly from common patterns, and their pricing can become substantial for very high-volume or continuous data needs. However, these limitations are continuously being addressed by new advancements, especially with AI integration.

Q5: Can I integrate data from no-code web scrapers into other applications?

A: Absolutely. Most reputable no-code web scrapers provide various data export options (CSV, JSON, Excel) and often offer API access or webhooks. This allows users to seamlessly integrate the extracted data into databases, analytics tools, CRM systems, or other business applications for further processing and analysis.
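As one example of such an integration, the sketch below loads a JSON export into a SQLite database using only Python's standard library. The export's field names (`title`, `price`) and the `products` table are assumptions for illustration; a real export will have whatever fields you configured in the scraper.

```python
import json
import sqlite3

# Hypothetical JSON export from a scraping run.
EXPORT_JSON = '[{"title": "Widget", "price": 9.99}, {"title": "Gadget", "price": 24.5}]'

def load_into_sqlite(json_text, conn):
    """Insert every exported record into a products table and return the row count."""
    records = json.loads(json_text)
    conn.execute("CREATE TABLE IF NOT EXISTS products (title TEXT, price REAL)")
    # Named placeholders pull the matching keys out of each record dict.
    conn.executemany(
        "INSERT INTO products (title, price) VALUES (:title, :price)", records
    )
    conn.commit()
    return len(records)

conn = sqlite3.connect(":memory:")   # use a file path instead to persist the data
inserted = load_into_sqlite(EXPORT_JSON, conn)
rows = conn.execute("SELECT title, price FROM products ORDER BY price").fetchall()
print(inserted, rows)
```

Once the data is in a database, analytics tools and CRM imports can query it directly instead of re-reading export files.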

At Scrapeless, we only access publicly available data while strictly complying with applicable laws, regulations, and website privacy policies. The content in this blog is for demonstration purposes only and does not involve any illegal or infringing activities. We make no guarantees and disclaim all liability for the use of information from this blog or third-party links. Before engaging in any scraping activities, consult your legal advisor and review the target website's terms of service or obtain the necessary permissions.