How to Handle Dynamic Content with BeautifulSoup? Definitive Guide 2025

Expert Network Defense Engineer

Key Takeaways:

- BeautifulSoup is for static HTML; it cannot execute JavaScript to render dynamic content.

- To scrape dynamic content, combine BeautifulSoup with browser automation tools (Selenium, Playwright) or specialized APIs.

- Browser automation renders the page, allowing BeautifulSoup to parse the full HTML.

- Directly querying APIs is highly efficient when dynamic content originates from known API endpoints.

- Specialized Web Scraping APIs offer a streamlined solution for complex, JavaScript-driven sites.

Introduction

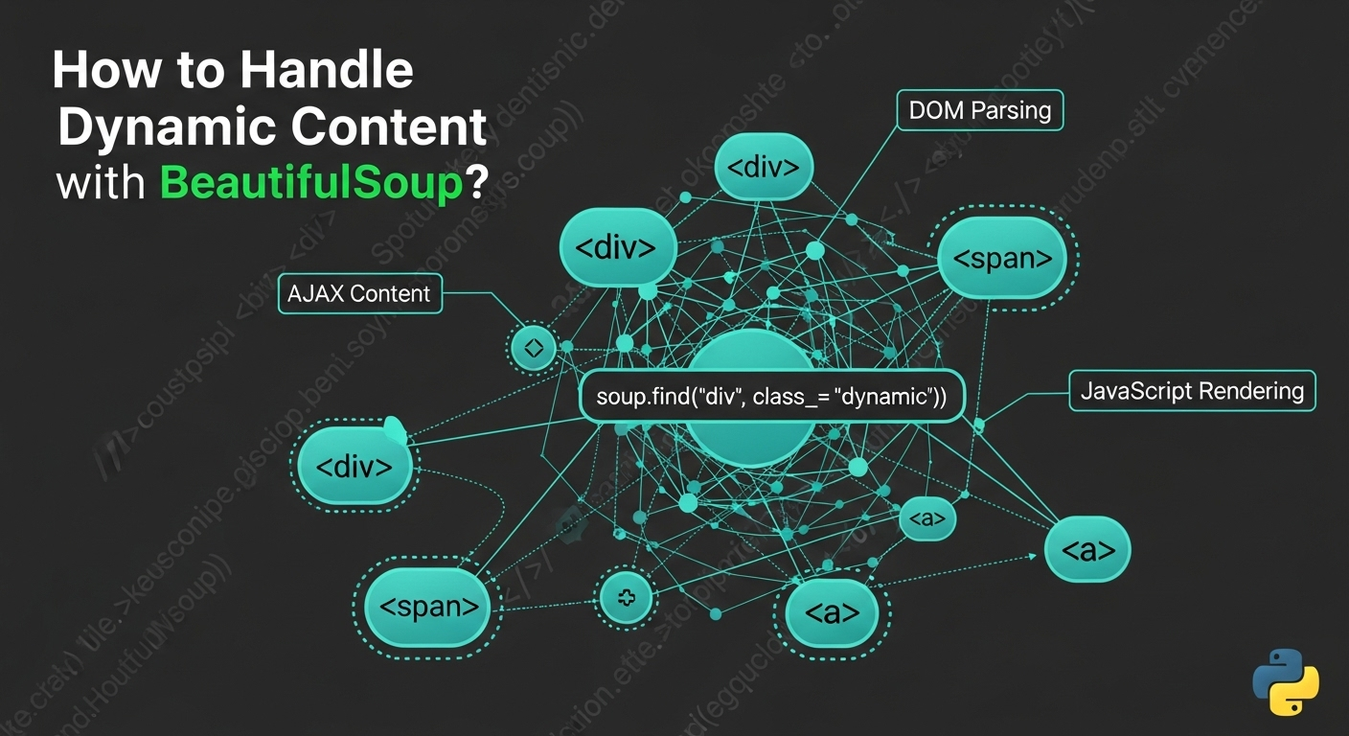

Web scraping often faces a challenge: dynamic content. Modern websites use JavaScript to load data and render elements asynchronously, making content invisible to BeautifulSoup alone. While BeautifulSoup excels at parsing static HTML, it cannot execute JavaScript. This guide explores effective methods to handle dynamic content when using BeautifulSoup, providing practical examples and best practices to extract data from JavaScript-driven websites reliably.

Understanding Dynamic Content and BeautifulSoup's Limitations

Dynamic content refers to web page elements loaded or generated after the initial HTML, typically via JavaScript. Examples include AJAX calls, client-side rendering (React, Angular), and WebSockets. BeautifulSoup is a static parser; it processes only the HTML it receives, lacking a JavaScript engine or rendering capabilities. Thus, it cannot access content generated by JavaScript after the initial page load. To overcome this, BeautifulSoup must be paired with tools that simulate a browser environment.
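To see the limitation concretely, the sketch below parses a page whose product list is filled in by an inline script. The HTML string and the `products` id are invented for illustration. BeautifulSoup returns the container exactly as it appears in the source (empty), because the script never runs.

```python
from bs4 import BeautifulSoup

# Hypothetical initial HTML as a server might send it: the list is empty
# and would only be populated by a browser executing the script.
RAW_HTML = """
<html><body>
  <ul id="products"></ul>
  <script>
    document.getElementById("products").innerHTML =
      "<li>Widget</li><li>Gadget</li>";
  </script>
</body></html>
"""

def list_products(html):
    """Return the text of every <li> inside #products, as BeautifulSoup sees it."""
    soup = BeautifulSoup(html, "html.parser")
    container = soup.find(id="products")
    return [li.get_text(strip=True) for li in container.find_all("li")]

# BeautifulSoup does not execute <script> tags, so nothing was injected.
print(list_products(RAW_HTML))  # []
```

Fed the same markup after a browser has rendered it (with the `<li>` elements present), the same function would return the product names. This is why every solution below focuses on obtaining *rendered* HTML first.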

Solution 1: Combining BeautifulSoup with Selenium

Selenium automates web browsers, executing JavaScript and interacting with web elements. Use it to load a page, allow dynamic content to render, then extract the full HTML for BeautifulSoup to parse.

How it Works:

Selenium launches a browser, navigates to the URL, waits for JavaScript to execute, retrieves the complete HTML source, and then passes it to BeautifulSoup.

Installation:

```bash
pip install selenium beautifulsoup4 webdriver-manager
```

Python Code Example (Snippet):

```python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from webdriver_manager.chrome import ChromeDriverManager
from bs4 import BeautifulSoup
import time

def scrape_dynamic_content_selenium(url):
    options = webdriver.ChromeOptions()
    options.add_argument("--headless")  # run Chrome without a visible window
    driver = webdriver.Chrome(service=Service(ChromeDriverManager().install()), options=options)
    try:
        driver.get(url)
        time.sleep(5)  # crude fixed delay for JavaScript to render; adjust per site
        html_content = driver.page_source
    finally:
        driver.quit()  # always release the browser, even if loading fails
    soup = BeautifulSoup(html_content, "html.parser")
    # ... extract data with soup ...
    return soup
```

Pros & Cons:

- Pros: Full JavaScript execution, browser interaction, widely adopted.

- Cons: Resource-intensive, slower, complex setup, prone to anti-bot detection [1].
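A fixed `time.sleep()` either wastes time or fires too early. Selenium's explicit waits (`WebDriverWait` with `expected_conditions`) instead poll until an element exists. The sketch below assumes the data appears under a CSS selector such as the hypothetical `.product`. The Selenium imports live inside the fetch function so that the BeautifulSoup parsing helper can be used and tested without a browser.

```python
from bs4 import BeautifulSoup

def fetch_rendered_html(url, css_selector, timeout=15):
    """Load url in headless Chrome and return page_source once css_selector is present."""
    # Imported here so parse_items() works even where Selenium is not installed.
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = webdriver.ChromeOptions()
    options.add_argument("--headless")
    driver = webdriver.Chrome(options=options)  # Selenium 4.6+ can locate a driver itself
    try:
        driver.get(url)
        # Poll for up to `timeout` seconds until a matching element exists;
        # raises selenium.common.exceptions.TimeoutException otherwise.
        WebDriverWait(driver, timeout).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, css_selector))
        )
        return driver.page_source
    finally:
        driver.quit()

def parse_items(html, css_selector):
    """Extract the text of every element matching css_selector."""
    soup = BeautifulSoup(html, "html.parser")
    return [el.get_text(strip=True) for el in soup.select(css_selector)]

# Usage (requires Chrome):
# html = fetch_rendered_html("https://example.com/products", ".product")
# print(parse_items(html, ".product"))
```

Separating fetching from parsing also lets you save rendered pages once and rerun the parser against them while you refine selectors.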

Solution 2: Combining BeautifulSoup with Playwright

Playwright is a modern library for controlling Chromium, Firefox, and WebKit browsers. It offers robust waiting mechanisms and is often more performant than Selenium for dynamic content.

How it Works:

Playwright launches a browser, navigates to the URL, waits for content to load, retrieves the full HTML, and then passes it to BeautifulSoup.

Installation:

```bash
pip install playwright beautifulsoup4
playwright install
```

Python Code Example (Snippet):

```python
from playwright.sync_api import sync_playwright
from bs4 import BeautifulSoup

def scrape_dynamic_content_playwright(url):
    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            # "networkidle": no network connections for at least 500 ms
            page.goto(url, wait_until="networkidle")
            html_content = page.content()
        finally:
            browser.close()
    soup = BeautifulSoup(html_content, "html.parser")
    # ... extract data with soup ...
    return soup
```

Pros & Cons:

- Pros: Multi-browser support, modern API, fast, auto-waiting.

- Cons: Resource-intensive, requires browser binaries, can be detected by anti-bot systems [2].
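Waiting for `networkidle` can stall on pages that poll continuously (analytics beacons, live updates). An alternative is to wait for the specific element you need with `page.wait_for_selector()`. The sketch below assumes a hypothetical `.product` selector and, as before, keeps the browser code apart from a pure parsing function.

```python
from bs4 import BeautifulSoup

def fetch_with_playwright(url, css_selector, timeout_ms=15_000):
    """Return the rendered HTML once an element matching css_selector is visible."""
    from playwright.sync_api import sync_playwright  # requires `playwright install`

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            page.goto(url, wait_until="domcontentloaded")
            # Default state is "visible"; raises playwright.sync_api.TimeoutError on timeout
            page.wait_for_selector(css_selector, timeout=timeout_ms)
            return page.content()
        finally:
            browser.close()

def parse_titles(html, css_selector):
    """Map each matching element to its text and optional link target."""
    soup = BeautifulSoup(html, "html.parser")
    results = []
    for el in soup.select(css_selector):
        link = el.find("a")
        results.append({
            "text": el.get_text(strip=True),
            "href": link.get("href") if link else None,
        })
    return results

# html = fetch_with_playwright("https://example.com/products", ".product")
# print(parse_titles(html, ".product"))
```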

Solution 3: Combining BeautifulSoup with Requests-HTML

requests-html extends requests to render JavaScript using a headless Chromium instance, offering a simpler way to handle dynamic content without full browser automation.

How it Works:

requests-html fetches initial HTML, renders JavaScript in the background, and then provides the processed HTML for BeautifulSoup parsing.

Installation:

```bash
pip install requests-html beautifulsoup4
```

Python Code Example (Snippet):

```python
from requests_html import HTMLSession
from bs4 import BeautifulSoup

def scrape_dynamic_content_requests_html(url):
    session = HTMLSession()
    try:
        r = session.get(url)
        # Executes the page's JavaScript in headless Chromium (downloaded on first use)
        r.html.render(sleep=3, keep_page=False)
        html_content = r.html.html
    finally:
        session.close()
    soup = BeautifulSoup(html_content, "html.parser")
    # ... extract data with soup ...
    return soup
```

Pros & Cons:

- Pros: Simpler API, integrated requests and rendering, potentially lighter weight.

- Cons: Less robust for complex JS/anti-bot, Chromium dependency, can be slow for many pages.

Solution 4: Combining BeautifulSoup with Splash

Splash is a scriptable headless browser that runs on a server, ideal for controlled JavaScript rendering, especially with Scrapy.

How it Works:

Your script sends a request to the Splash server, which renders the page and returns the full HTML for BeautifulSoup to parse.

Installation:

Requires Docker to run Splash:

```bash
docker run -p 8050:8050 scrapinghub/splash
```

Python Code Example (Snippet):

```python
import requests
from bs4 import BeautifulSoup

def scrape_dynamic_content_splash(url, splash_url="http://localhost:8050"):
    params = {
        "url": url,
        "wait": 2,  # seconds Splash waits after the page loads
    }
    # render.html returns the rendered HTML directly as the response body
    response = requests.get(f"{splash_url}/render.html", params=params, timeout=60)
    response.raise_for_status()
    html_content = response.text
    soup = BeautifulSoup(html_content, "html.parser")
    # ... extract data with soup ...
    return soup
```

Pros & Cons:

- Pros: Isolated environment, scriptable, good for Scrapy integration.

- Cons: Complex setup (Docker), performance overhead, resource intensive.
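The `render.html` call above relies on a fixed `wait`. For finer control, Splash's `/execute` endpoint runs a Lua script sent with the request, and any extra JSON keys are exposed to the script as `args`. The sketch below assumes a local Splash container on port 8050. The payload builder is kept separate so it can be checked without a running server.

```python
import requests

# Lua script run by Splash: load the page, wait, return the rendered HTML.
LUA_SCRIPT = """
function main(splash, args)
  assert(splash:go(args.url))
  assert(splash:wait(args.wait))
  return splash:html()
end
"""

def build_execute_payload(url, wait=2.0):
    """JSON body for Splash's /execute endpoint; url and wait become args.url / args.wait."""
    return {"lua_source": LUA_SCRIPT, "url": url, "wait": wait}

def render_with_lua(url, splash_url="http://localhost:8050", wait=2.0):
    """POST the script to Splash and return the string produced by main()."""
    response = requests.post(
        f"{splash_url}/execute", json=build_execute_payload(url, wait), timeout=90
    )
    response.raise_for_status()
    return response.text
```

The returned string is ordinary HTML, so it can be passed straight to `BeautifulSoup(html, "html.parser")`. The same script is the natural place to add clicks, scrolling, or `splash:wait_for_resume()`-style logic when a fixed delay is not enough.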

Solution 5: Directly Querying APIs (When Available)

Often, dynamic content is loaded via AJAX requests to a backend API. Directly querying these APIs can be more efficient than browser rendering.

How it Works:

Inspect network traffic in browser dev tools to find API endpoints. Replicate the request (method, headers, payload) using Python's requests library. Parse the JSON/XML response. Optionally, use BeautifulSoup if the API returns HTML snippets.

Installation:

```bash
pip install requests beautifulsoup4
```

Python Code Example (Snippet):

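```python
import requests
from bs4 import BeautifulSoup

def scrape_dynamic_content_api(api_url, headers=None, params=None, data=None):
    # POST when the endpoint expects a body (sent as JSON here; use data= for form bodies)
    if data is not None:
        response = requests.post(api_url, headers=headers, params=params, json=data, timeout=30)
    else:
        response = requests.get(api_url, headers=headers, params=params, timeout=30)
    response.raise_for_status()
    api_data = response.json()
    # ... process api_data ...
    # If the API returns HTML snippets ("html_content" is an example key):
    # soup = BeautifulSoup(api_data["html_content"], "html.parser")
    # ... parse with soup ...
    return api_data
```

Pros & Cons: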

- Pros: Fast, resource-light, targeted data, less prone to anti-bot.

- Cons: Requires API discovery, vulnerable to API changes, authentication handling, not always available.
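Once an endpoint is found, most of the work is mapping its JSON. The sketch below processes a hypothetical response body, of the kind you might copy from the Network tab, that mixes structured fields with an HTML fragment. The key names (`items`, `name`, `price`, `html_content`, `next_page`) are assumptions for illustration, not a real API.

```python
import json
from bs4 import BeautifulSoup

# Hypothetical XHR response body copied from the browser's Network tab.
SAMPLE_RESPONSE = """
{
  "items": [{"name": "Widget", "price": 9.99}, {"name": "Gadget", "price": 24.5}],
  "html_content": "<ul><li class='tag'>new</li><li class='tag'>sale</li></ul>",
  "next_page": null
}
"""

def process_api_data(api_data):
    """Combine structured JSON fields with data parsed from an embedded HTML fragment."""
    products = [(item["name"], float(item["price"])) for item in api_data.get("items", [])]
    tags = []
    if api_data.get("html_content"):
        soup = BeautifulSoup(api_data["html_content"], "html.parser")
        tags = [li.get_text(strip=True) for li in soup.select("li.tag")]
    # In this hypothetical schema, a null or missing next_page marks the last page
    return {"products": products, "tags": tags, "has_more": api_data.get("next_page") is not None}

result = process_api_data(json.loads(SAMPLE_RESPONSE))
print(result)
```

In a real scraper, `api_data` would come from `scrape_dynamic_content_api()`, and `has_more` would decide whether to request the next page.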

Solution 6: Headless Browsers (Standalone)

For lightweight rendering without full automation frameworks, headless browser libraries like pyppeteer (an unofficial Python port of Puppeteer) offer programmatic control over headless Chromium to render JavaScript-heavy pages.

How it Works:

A headless browser launches, navigates to the URL, executes JavaScript, and returns the full HTML, which is then passed to BeautifulSoup.

Installation (for pyppeteer):

```bash
pip install pyppeteer beautifulsoup4
```

Python Code Example (Snippet):

```python
import asyncio
from pyppeteer import launch
from bs4 import BeautifulSoup

async def scrape_dynamic_content_pyppeteer(url):
    browser = await launch(headless=True)
    try:
        page = await browser.newPage()
        # "networkidle0": no network connections for at least 500 ms
        await page.goto(url, waitUntil="networkidle0")
        html_content = await page.content()
    finally:
        await browser.close()
    soup = BeautifulSoup(html_content, "html.parser")
    # ... extract data with soup ...
    return soup

# soup = asyncio.run(scrape_dynamic_content_pyppeteer("https://example.com"))
```

Pros & Cons:

- Pros: Lightweight rendering, modern JavaScript support, fine-grained control.

- Cons: Requires asyncio, resource consumption, Chromium setup.
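Because pyppeteer is asyncio-based, several pages can be rendered concurrently without threads. The sketch below caps concurrency with `asyncio.Semaphore` and `asyncio.gather`. `render` is any coroutine function that takes a URL, such as the `scrape_dynamic_content_pyppeteer` function in this section. The limit of 3 is an arbitrary illustrative default.

```python
import asyncio

async def render_many(urls, render, max_concurrency=3):
    """Run `render(url)` for every URL with at most max_concurrency in flight.

    Results are returned in the same order as `urls`.
    """
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(url):
        async with semaphore:  # waits while max_concurrency renders are running
            return await render(url)

    return await asyncio.gather(*(guarded(u) for u in urls))

# Usage (each call launches its own browser, so keep the limit small):
# soups = asyncio.run(render_many(["https://example.com/a", "https://example.com/b"],
#                                 scrape_dynamic_content_pyppeteer))
```

Capping concurrency matters here because each headless Chromium instance uses significant memory.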

Solution 7: Utilizing Web Scraping APIs (Specialized Services)

For complex scenarios, specialized Web Scraping APIs handle browser rendering, JavaScript execution, IP rotation, and anti-bot evasion, returning fully rendered HTML or structured data.

How it Works:

Your script sends a simple HTTP request to the API with the target URL. The API handles all rendering and anti-bot measures, then returns the clean HTML for BeautifulSoup to parse.

Installation:

```bash
pip install requests beautifulsoup4
```

Python Code Example (Snippet):

```python
import requests
from bs4 import BeautifulSoup

def scrape_dynamic_content_api_service(target_url, api_key, api_endpoint="https://api.scrapeless.com/v1/scrape"):
    # Endpoint and payload field names as used in this example; confirm them in the API docs
    payload = {
        "url": target_url,
        "api_key": api_key,
        "render_js": True,
    }
    # json= serializes the payload and sets Content-Type: application/json
    response = requests.post(api_endpoint, json=payload, timeout=120)
    response.raise_for_status()
    response_data = response.json()
    html_content = response_data.get("html")
    if html_content:
        soup = BeautifulSoup(html_content, "html.parser")
        # ... extract data with soup ...
        return soup
    return None
```

Pros & Cons:

- Pros: Simplicity, high success rate, scalability, efficiency, focus on data.

- Cons: Paid service, external dependency, less control.
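Calls to a remote rendering service can fail transiently, for example from rate limiting or timeouts on slow pages. The sketch below builds a `requests.Session` that retries with exponential backoff using urllib3's `Retry`. The status codes and retry counts are illustrative choices, not provider recommendations. POST is listed explicitly because urllib3 does not retry it by default, so only use this for requests that are safe to repeat.

```python
import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry

def make_retrying_session(total=3, backoff_factor=1.0):
    """Session that retries 429/5xx responses and connection errors with backoff."""
    retry = Retry(
        total=total,
        backoff_factor=backoff_factor,  # delay between attempts grows exponentially
        status_forcelist=[429, 500, 502, 503, 504],
        allowed_methods=["GET", "POST"],  # POST is not retried unless listed (urllib3 >= 1.26)
        respect_retry_after_header=True,
    )
    adapter = HTTPAdapter(max_retries=retry)
    session = requests.Session()
    session.mount("https://", adapter)
    session.mount("http://", adapter)
    return session

# session = make_retrying_session()
# response = session.post(api_endpoint, json=payload, timeout=120)
```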

Solution 8: Integrating with Scrapy

Scrapy is a high-level web scraping framework. While it doesn't execute JavaScript natively, it can integrate with Splash (via the scrapy-splash middleware) or with Selenium/Playwright (via plugins such as scrapy-playwright) to handle dynamic content, making it suitable for large-scale projects.

How it Works:

Scrapy sends a request, which is intercepted by middleware and forwarded to a JavaScript rendering service. The rendered HTML is returned to Scrapy, which can then be parsed by BeautifulSoup or Scrapy's own selectors.

Installation:

```bash
pip install scrapy beautifulsoup4
# For Splash integration: pip install scrapy-splash and run the Splash Docker container
```

Pros & Cons:

- Pros: Scalability, robustness, flexibility, good for large-scale projects.

- Cons: Steep learning curve, overhead for simple tasks, JavaScript rendering requires external service.
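For the Splash route, the `settings.py` fragment below mirrors the configuration documented in the scrapy-splash README. Verify it against the version you install. It assumes Splash is running locally on port 8050, for example via the Docker command from Solution 4.

```python
# settings.py fragment for scrapy-splash (assumes Splash at localhost:8050)
SPLASH_URL = "http://localhost:8050"

DOWNLOADER_MIDDLEWARES = {
    "scrapy_splash.SplashCookiesMiddleware": 723,
    "scrapy_splash.SplashMiddleware": 725,
    "scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware": 810,
}

SPIDER_MIDDLEWARES = {
    "scrapy_splash.SplashDeduplicateArgsMiddleware": 100,
}

# Make duplicate filtering and HTTP caching aware of Splash arguments
DUPEFILTER_CLASS = "scrapy_splash.SplashAwareDupeFilter"
HTTPCACHE_STORAGE = "scrapy_splash.SplashAwareFSCacheStorage"
```

In a spider, requests are then issued as `SplashRequest(url, self.parse, args={"wait": 2})` (imported from `scrapy_splash`). The `parse()` callback receives the rendered page, whose `response.text` can be handed to BeautifulSoup or queried with Scrapy's own selectors.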

Solution 9: Using requests_html for Simple JavaScript Rendering

requests_html combines requests with headless Chromium to render JavaScript, offering a simpler approach than full browser automation.

How it Works:

It fetches raw HTML, then renders JavaScript in a headless browser, providing the fully rendered HTML for BeautifulSoup parsing.

Installation:

```bash
pip install requests-html beautifulsoup4
```

Python Code Example (Snippet):

```python
from requests_html import HTMLSession
from bs4 import BeautifulSoup

def scrape_dynamic_content_requests_html_simple(url):
    session = HTMLSession()
    try:
        r = session.get(url)
        # Executes the page's JavaScript in headless Chromium, then waits 2 s
        r.html.render(sleep=2, keep_page=False)
        html_content = r.html.html
    finally:
        session.close()
    soup = BeautifulSoup(html_content, "html.parser")
    # ... extract data with soup ...
    return soup
```

Pros & Cons:

- Pros: Simplicity, integrated requests/rendering, potentially resource-efficient.

- Cons: Less robust for complex JS/anti-bot, Chromium dependency, can be slow.

Solution 10: Using a Proxy Service with Built-in JavaScript Rendering

Advanced proxy services offer built-in JavaScript rendering, acting as a middleman to return fully rendered HTML while handling proxies, CAPTCHAs, and anti-bot measures.

How it Works:

Your script sends a request to the proxy service, which renders the page with JavaScript and returns the complete HTML for BeautifulSoup parsing.

Installation:

```bash
pip install requests beautifulsoup4
```

Python Code Example (Snippet):

```python
import requests
from bs4 import BeautifulSoup

def scrape_dynamic_content_proxy_service(target_url, proxy_api_key, proxy_endpoint="https://api.someproxyservice.com/render"):
    # Placeholder endpoint and field names: substitute your provider's documented values
    payload = {
        "url": target_url,
        "api_key": proxy_api_key,
        "render_js": True,
    }
    response = requests.post(proxy_endpoint, json=payload, timeout=120)
    response.raise_for_status()
    html_content = response.json().get("html")
    if html_content:
        soup = BeautifulSoup(html_content, "html.parser")
        # ... extract data with soup ...
        return soup
    return None
```

Pros & Cons:

- Pros: Simplified infrastructure, integrated solutions (JS rendering, anti-bot), scalability, ease of use.

- Cons: Paid service, external dependency, less control.

Comparison Summary: Solutions for Dynamic Content with BeautifulSoup

| Solution | Complexity (Setup/Maintenance) | Cost (Typical) | Performance | Robustness (Anti-Bot) | Best For |
|---|---|---|---|---|---|
| 1. BeautifulSoup + Selenium | Medium to High | Low (Free) | Moderate | Low to Medium | Complex interactions, testing, small to medium-scale scraping |
| 2. BeautifulSoup + Playwright | Medium | Low (Free) | Good | Low to Medium | Modern web apps, multi-browser testing, small to medium-scale scraping |
| 3. BeautifulSoup + Requests-HTML | Low to Medium | Low (Free) | Moderate | Low | Simple dynamic pages, quick scripts, less complex JS rendering |
| 4. BeautifulSoup + Splash | High (Docker) | Low (Free) | Moderate | Medium | Scrapy integration, isolated rendering, complex JS, large-scale projects |
| 5. Directly Querying APIs | Low (Discovery) | Low (Free) | High | High (if API stable) | Structured data from known APIs, high-speed, resource-efficient |
| 6. BeautifulSoup + Headless Browsers (e.g., Pyppeteer) | Medium | Low (Free) | Good | Low to Medium | Simple JS rendering, programmatic browser control, less overhead than full frameworks |
| 7. BeautifulSoup + Web Scraping APIs | Low | Medium to High | Very High | Very High | Large-scale, complex sites, anti-bot evasion, high reliability |
| 8. Scrapy Integration (with Splash/Selenium) | Very High | Low (Free) | High | Medium to High | Enterprise-grade, large-scale crawling, robust data pipelines |
| 9. requests_html (standalone) | Low | Low (Free) | Moderate | Low | Quick scripts, basic JS rendering, Pythonic approach |
| 10. Proxy Service with JS Rendering | Low | Medium to High | High | High | Offloading infrastructure, anti-bot, medium to large-scale scraping |

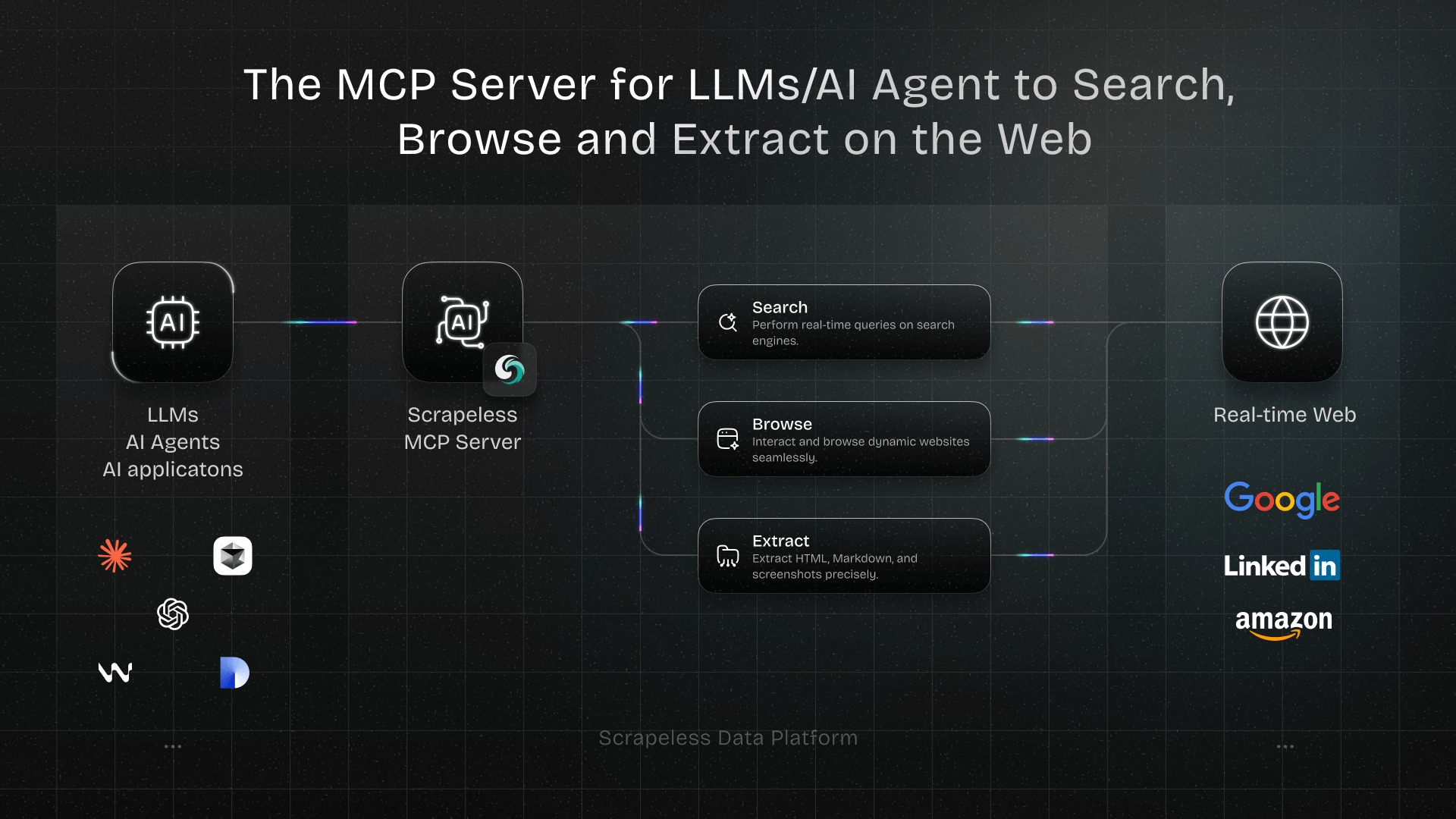

Why Scrapeless is Your Best Alternative

While BeautifulSoup is excellent for parsing HTML, handling dynamic content often adds significant complexity. This is where a specialized Web Scraping API like Scrapeless offers a streamlined and robust solution. Scrapeless abstracts away the challenges of JavaScript rendering, IP rotation, and anti-bot evasion, allowing you to focus purely on data extraction.

How Scrapeless Simplifies Dynamic Content Scraping:

- Automated JavaScript Rendering: Scrapeless automatically executes all JavaScript, ensuring dynamic content from AJAX, client-side frameworks, or WebSockets is fully rendered. No need to manage headless browsers.

- Built-in Anti-Bot and CAPTCHA Bypass: It integrates advanced evasion techniques, including intelligent IP rotation, browser fingerprinting, and CAPTCHA solving, to bypass sophisticated anti-bot systems seamlessly.

- Simplified Integration: Your Python script makes a simple HTTP request to the Scrapeless API. The API handles all the heavy lifting, returning clean, fully rendered HTML for BeautifulSoup to parse, significantly reducing your codebase.

- Scalability and Reliability: Designed for enterprise-grade data extraction, Scrapeless offers unparalleled scalability and high uptime, without you managing infrastructure, proxies, or browser instances.

- Cost-Effectiveness: While a premium service, Scrapeless often proves more cost-effective than building and maintaining custom dynamic scraping solutions, saving development time and resources.

By integrating Scrapeless, you transform dynamic content scraping into an efficient process, leveraging BeautifulSoup's parsing strengths without the complexities of JavaScript rendering and anti-bot measures.

Conclusion and Call to Action

Handling dynamic content with BeautifulSoup requires moving beyond its static parsing capabilities. Various solutions exist, from pairing BeautifulSoup with browser automation tools like Selenium and Playwright, to leveraging specialized services like Splash or directly querying APIs. Each method offers distinct advantages and trade-offs.

For developers tackling modern, JavaScript-heavy websites, the choice depends on project scale, dynamic content complexity, and anti-bot evasion needs. While self-managed browser automation offers control, it comes with significant overhead and maintenance.

For an efficient, scalable, and hassle-free approach, a dedicated Web Scraping API like Scrapeless stands out. By offloading the complexities of JavaScript rendering, IP rotation, and anti-bot bypass, Scrapeless allows you to maximize BeautifulSoup's parsing power without infrastructure management. It enables reliable data extraction from challenging dynamic websites.

Ready to simplify your dynamic web scraping?

Don't let dynamic content be a barrier to your data extraction goals. Explore how Scrapeless can streamline your workflow and provide reliable access to the web data you need. Start your free trial today and experience the future of web scraping.

Start Your Free Trial with Scrapeless Now!

Frequently Asked Questions (FAQ)

Q1: Why can't BeautifulSoup directly handle dynamic content?

BeautifulSoup is a static HTML parser; it lacks a JavaScript engine and rendering capabilities. It cannot execute JavaScript code that loads additional content or modifies the DOM, so dynamic content generated post-initial load is invisible to it.

Q2: Is it always necessary to use a headless browser for dynamic content?

Not always. If dynamic content comes from a discoverable API, directly querying that API with requests is more efficient. However, for complex JavaScript interactions, client-side rendering, or hidden APIs, a headless browser or specialized scraping API becomes necessary.

Q3: What are the main trade-offs between Selenium/Playwright and Web Scraping APIs?

Selenium/Playwright: Full control, free apart from infrastructure costs, and good for testing; but resource-hungry, slower, complex to set up, prone to anti-bot detection, and high-maintenance.

Web Scraping APIs: Highly efficient, abstract away complexities (JS rendering, proxies, anti-bot), scalable, and reliable; but a paid service with less granular control and an external dependency.

Choice depends on project scale, budget, and desired control vs. convenience.

Q4: How can I identify if a website uses dynamic content?

- Disable JavaScript: If content disappears, it's dynamic.

- Browser Dev Tools (Network tab): Look for XHR/Fetch requests loading data after initial HTML.

- View Page Source vs. Inspect Element: If 'Inspect Element' shows more content, it's dynamic.
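The "View Page Source" check can also be scripted. The heuristic below looks for text that is visible in the browser inside the raw server response. `raw_html` is assumed to be the server's response (for example `requests.get(url).text`), not the live DOM. Script and style contents are dropped first, because text inside a `<script>` string is not rendered content.

```python
from bs4 import BeautifulSoup

def looks_dynamic(raw_html, expected_text):
    """Heuristic: True if text seen in the browser is absent from the raw HTML's visible text."""
    soup = BeautifulSoup(raw_html, "html.parser")
    for tag in soup(["script", "style"]):
        tag.decompose()  # remove code/CSS so only markup text is compared
    visible_text = soup.get_text(" ", strip=True)
    return expected_text not in visible_text

# raw = requests.get("https://example.com/product/1").text
# print(looks_dynamic(raw, "Add to cart"))
```

A `True` result suggests the content is injected by JavaScript. It may also simply be formatted differently in the source, so confirm in the Network tab before choosing a rendering approach.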

Q5: Can I use BeautifulSoup for parsing the HTML returned by a Web Scraping API?

Yes, this is highly recommended. Web Scraping APIs return fully rendered, static HTML, which BeautifulSoup is perfectly designed to parse. This combines robust content access with flexible data extraction.

References

[1] ZenRows: Selenium Anti-Bot Bypass

[2] Playwright: Best Practices

At Scrapeless, we only access publicly available data while strictly complying with applicable laws, regulations, and website privacy policies. The content in this blog is for demonstration purposes only and does not involve any illegal or infringing activities. We make no guarantees and disclaim all liability for the use of information from this blog or third-party links. Before engaging in any scraping activities, consult your legal advisor and review the target website's terms of service or obtain the necessary permissions.