Using MCP server and Cursor to build an e-commerce analysis assistant

Advanced Data Extraction Specialist

The global e-commerce market continues to grow and is expected to reach $4.8 trillion by 2025. To stand out in a highly competitive environment, e-commerce merchants need accurate data analysis. Building an e-commerce analysis assistant with an MCP server and Cursor helps merchants capture market data and analyze trends efficiently, so they can make better decisions and stay competitive.

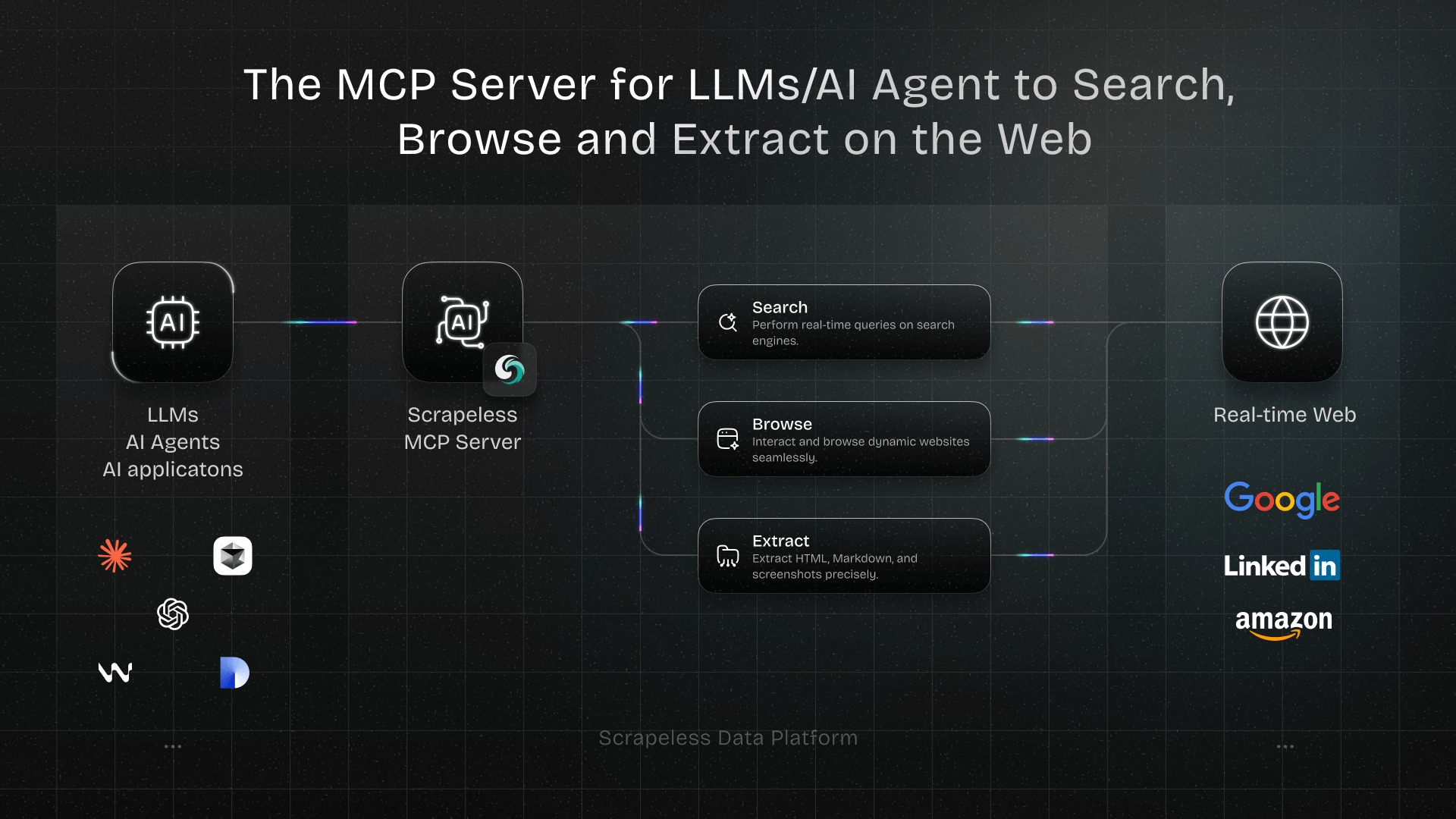

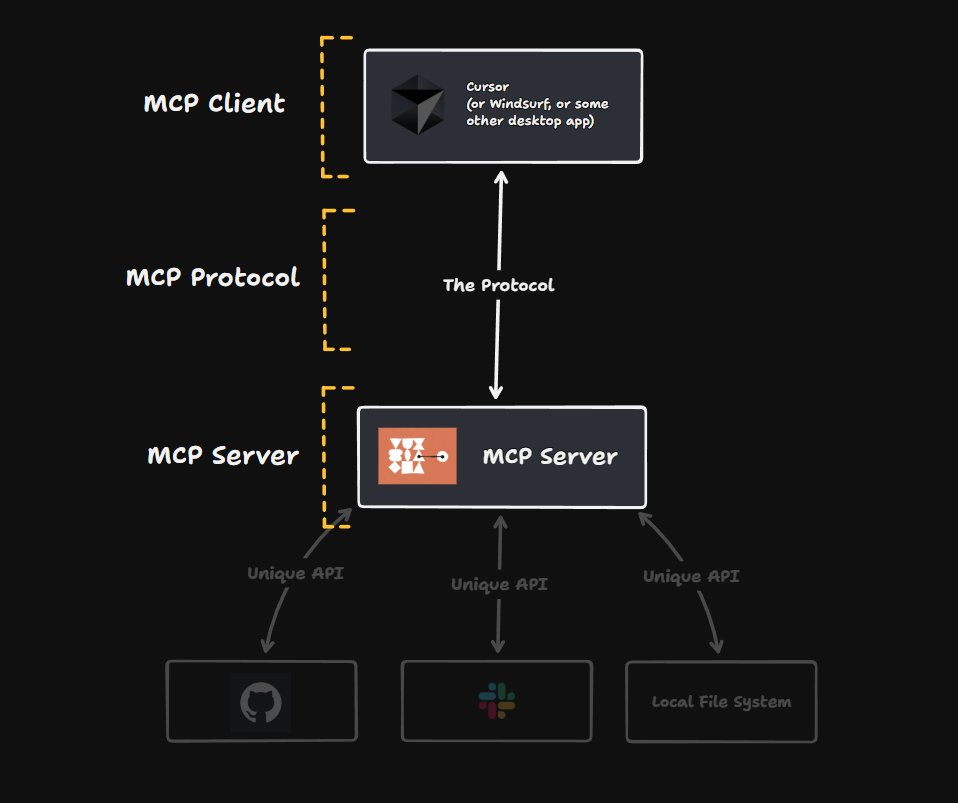

What is MCP and why use it?

The Model Context Protocol (MCP) is an open standard that allows AI assistants like Claude to interact with a variety of data sources and tools. Key benefits include:

- Universal access: Query and retrieve data from multiple sources using a single protocol.

- Secure, standardized connections: Handles authentication and data formats, eliminating the need for ad-hoc API connectors.

- Sustainable ecosystem: Reusable connectors simplify development across multiple LLMs.
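To make the "single protocol" point concrete: MCP messages are JSON-RPC 2.0, and a client invokes a tool exposed by a server with a `tools/call` request. The sketch below builds such a request in Python. The tool name `google_search` and its `query` argument are hypothetical placeholders, not names confirmed for the Scrapeless server; a real client would normally discover the available tools with a `tools/list` request first.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and argument, for illustration only.
request = build_tool_call(1, "google_search", {"query": "sports headphones"})
print(request)
```

In practice Cursor builds and sends these messages for you; the sketch only shows why one connector format can serve many tools.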

How to Configure Scrapeless MCP Server on Cursor

1. Install Node.js and npm:

- If you haven't installed Node.js and npm yet, download and install the latest stable version from the official Node.js website.

- After installation, enter the following commands in the terminal to check that the installation succeeded:

language

node -v

npm -v- If the installation is successful, it will display version information similar to the following:

language

v22.x.x

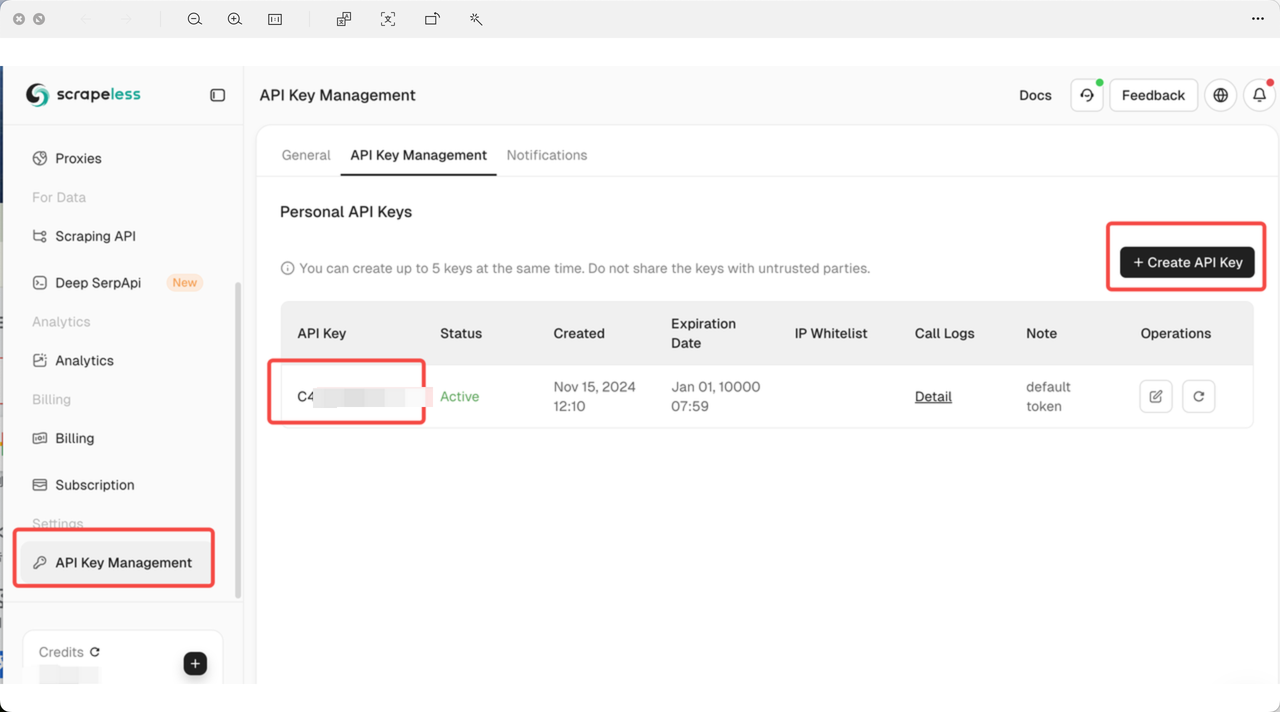

10.x.x2. Get the Scrapeless Key

Register and log in to the Scrapeless Dashboard, where you can get a free trial. Then click "API Key Management" to create your Scrapeless API Key.

3. Configure Scrapeless in Cursor

3.1 Download and install the Cursor desktop app

3.2 Configure Scrapeless MCP Server in Cursor

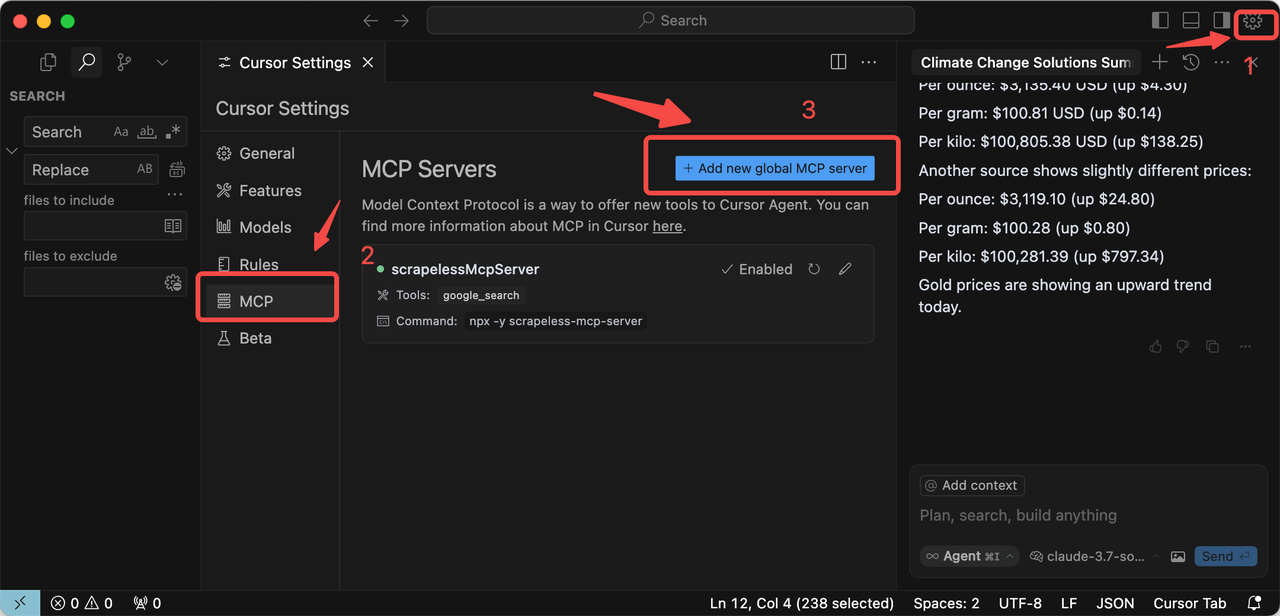

- Click "Settings" and find "MCP Options" in the left menu.

- Click "Add new global MCP server" to open the configuration box.

- Configure Scrapeless

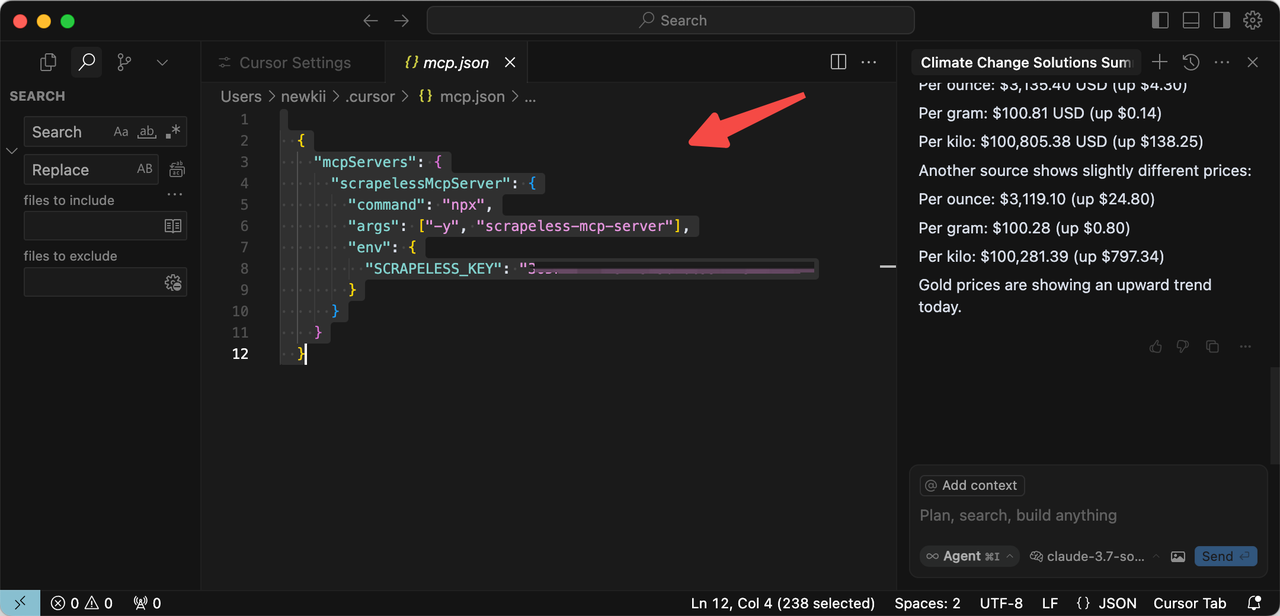

Enter the following configuration in Cursor, replacing "YOUR_SCRAPELESS_KEY" with the Scrapeless API Key you just created.

{
  "mcpServers": {
    "scrapelessMcpServer": {
      "command": "npx",
      "args": ["-y", "scrapeless-mcp-server"],
      "env": {
        "SCRAPELESS_KEY": "YOUR_SCRAPELESS_KEY"
      }
    }
  }
}
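If you would rather not paste the key by hand, the same configuration can be generated by a small script. The following is a minimal sketch, assuming the key is stored in an environment variable named `SCRAPELESS_KEY` (an assumption made for this example, not a Scrapeless requirement). It prints the JSON so you can paste it into Cursor's configuration box, and it refuses to emit the unedited placeholder.

```python
import json
import os

PLACEHOLDER = "YOUR_SCRAPELESS_KEY"


def build_cursor_config(api_key: str) -> dict:
    """Return the MCP server configuration shown above with the key filled in."""
    if not api_key or api_key == PLACEHOLDER:
        raise ValueError("Set a real Scrapeless API key before generating the config.")
    return {
        "mcpServers": {
            "scrapelessMcpServer": {
                "command": "npx",
                "args": ["-y", "scrapeless-mcp-server"],
                "env": {"SCRAPELESS_KEY": api_key},
            }
        }
    }


if __name__ == "__main__":
    # Assumption: the key was exported beforehand in the shell environment.
    key = os.environ.get("SCRAPELESS_KEY", "")
    try:
        print(json.dumps(build_cursor_config(key), indent=2))
    except ValueError as err:
        print(f"Config not generated: {err}")
```

Keeping the key in the environment rather than in a saved file makes it less likely to end up in version control by accident.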

4. Use Cursor

You have now configured the Scrapeless MCP Server. Enter a query in the Cursor chat box, and Cursor will call Scrapeless to retrieve the information.

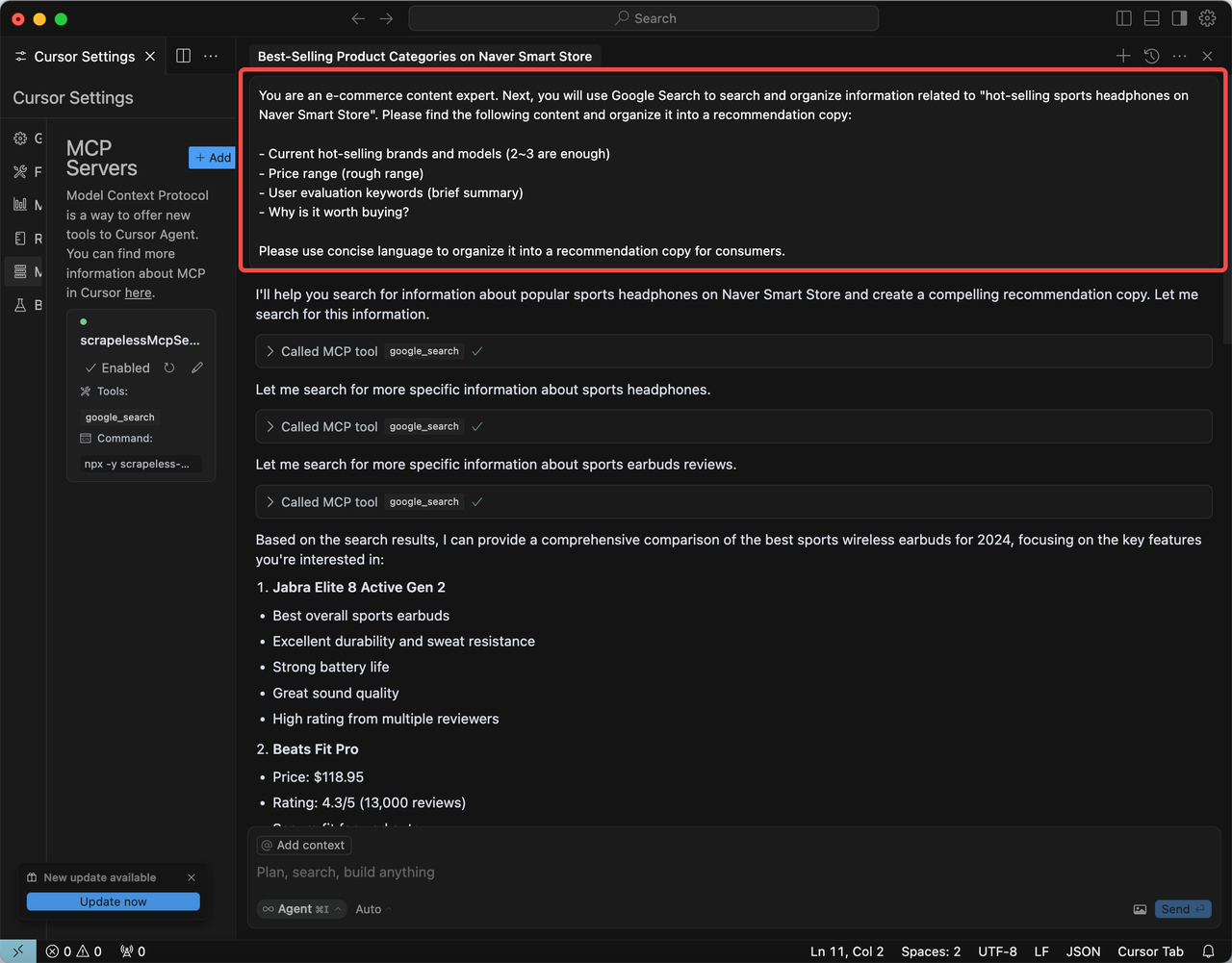

Query command:

You are an e-commerce content expert. Next, you will use Google Search to search and organize information related to "hot-selling sports headphones on Naver Smart Store". Please find the following content and organize it into a recommendation copy:

- Current hot-selling brands and models (2~3 are enough)

- Price range (rough range)

- User evaluation keywords (brief summary)

- Why is it worth buying?

Please use concise language to organize it into a recommendation copy for consumers.

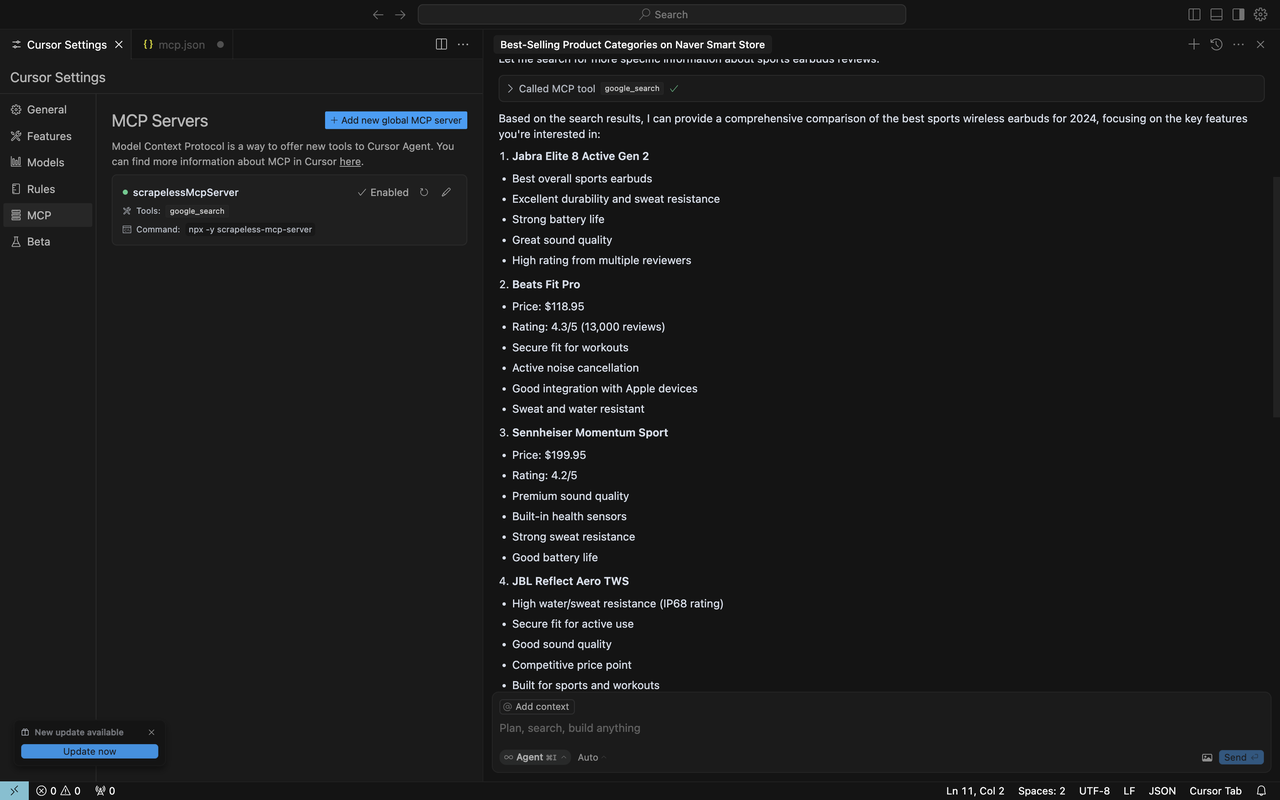

As the screenshot shows, Cursor called the Scrapeless MCP Server and returned a recommendation copy that covers the requested points.
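The query above follows a fixed shape, so it can be templated when you want to repeat the analysis for other products or marketplaces. Here is a minimal sketch; the function name `build_query` and its parameters are hypothetical and introduced only for this example. The output is plain text to paste into the Cursor chat box.

```python
def build_query(topic: str, max_models: int = 3) -> str:
    """Fill the recommendation-copy query used above with a new search topic."""
    requirements = [
        f"Current hot-selling brands and models (2~{max_models} are enough)",
        "Price range (rough range)",
        "User evaluation keywords (brief summary)",
        "Why is it worth buying?",
    ]
    bullets = "\n".join(f"- {item}" for item in requirements)
    return (
        "You are an e-commerce content expert. Use Google Search to find and "
        f'organize information related to "{topic}". '
        "Please find the following content and organize it into a recommendation copy:\n"
        f"{bullets}\n"
        "Please use concise language to organize it into a recommendation copy for consumers."
    )


print(build_query("hot-selling sports headphones on Naver Smart Store"))
```

Changing only the `topic` argument keeps the requested output structure identical across runs, which makes results for different products easier to compare.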

Through the above process, we have successfully built an intelligent and efficient e-commerce analysis assistant. It can not only quickly analyze massive amounts of data, but also provide deep insights to help e-commerce teams make smarter business decisions. As cross-border e-commerce continues to grow, this solution based on flexible integration of AI and protocols will become an important part of future retail intelligence. If you also want to build your own intelligent analysis assistant, now is the best time.

At Scrapeless, we only access publicly available data while strictly complying with applicable laws, regulations, and website privacy policies. The content in this blog is for demonstration purposes only and does not involve any illegal or infringing activities. We make no guarantees and disclaim all liability for the use of information from this blog or third-party links. Before engaging in any scraping activities, consult your legal advisor and review the target website's terms of service or obtain the necessary permissions.